Semantic Placement in Augmented Reality using MrEd

In this article we’re going to take a brief look at how we may want to think about placement of objects in Augmented Reality. We're going to use our recently released lightweight AR editing tool MrEd to make this easy to demonstrate.

Designers often express ideas in domain-appropriate language. For example, a designer may say “place that chair on the floor” or “hang that photo at eye level on the wall”.

However, when we finalize a virtual scene in 3D we often keep only the literal or absolute XYZ position of elements and throw out the original intent - the deeper reason why an object ended up in a certain position.

It turns out that it’s worth keeping the intention, so that when AR scenes are re-created for new participants or in new physical locations the scenes still “work” - they are still satisfying experiences - even if some aspects change.

In a sense this echoes the Japanese idea of 'Wabi-Sabi': aesthetic placement is always imperfect and contends with fickle forces. Describing placement in terms of semantic intent is also similar to responsive design on the web or the idea of design patterns as described by Christopher Alexander.

Let’s look at two simple examples of semantic placement in practice.

1. Relative to the Ground

When you’re placing objects in augmented reality you often want to specify that those objects should be placed relative to other objects. A typical, in fact ubiquitous, example is positioning an object relative to “the ground”.

Sometimes the designer's intent is to select the highest surface underneath the object in question (such as placing a lamp on a table); at other times it is to select the lowest surface underneath an object (such as placing a kitten on the floor under a table). Often, as well, we may want to express a placement in the air, such as a mailbox or a bird.
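To make the three intents above concrete, here is a minimal sketch of how a stored intent could be resolved into a height once surfaces are known. It is not MrEd code: `resolveHeight`, the `anchor` and `offset` fields, and the list of surface heights are all hypothetical names chosen for illustration.

```javascript
// Hypothetical sketch: turn a semantic height intent into a concrete y value.
// `surfaces` is a list of heights (in meters) of the horizontal surfaces that
// were detected underneath the object. None of these names come from MrEd.
function resolveHeight(intent, surfaces) {
  if (surfaces.length === 0) {
    return null; // nothing detected yet, so the caller leaves the object alone
  }
  const offset = intent.offset || 0; // optional height above the chosen surface
  switch (intent.anchor) {
    case "highest": // e.g. a lamp on a table
      return Math.max(...surfaces) + offset;
    case "lowest": // e.g. a kitten on the floor under the table
      return Math.min(...surfaces) + offset;
    default:
      throw new Error("unknown anchor: " + intent.anchor);
  }
}

// A floor at 0 m and a tabletop at 0.75 m beneath the object.
const detected = [0.0, 0.75];
const lampY = resolveHeight({ anchor: "highest" }, detected);
const kittenY = resolveHeight({ anchor: "lowest" }, detected);
// "In the air" is expressed as an offset from a surface, not as an absolute y.
const mailboxY = resolveHeight({ anchor: "lowest", offset: 1.1 }, detected);
console.log(lampY, kittenY, mailboxY);
```

The point of the sketch is that the scene stores `{ anchor, offset }` rather than a fixed y, so the same description can be resolved again in a room with different surfaces.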

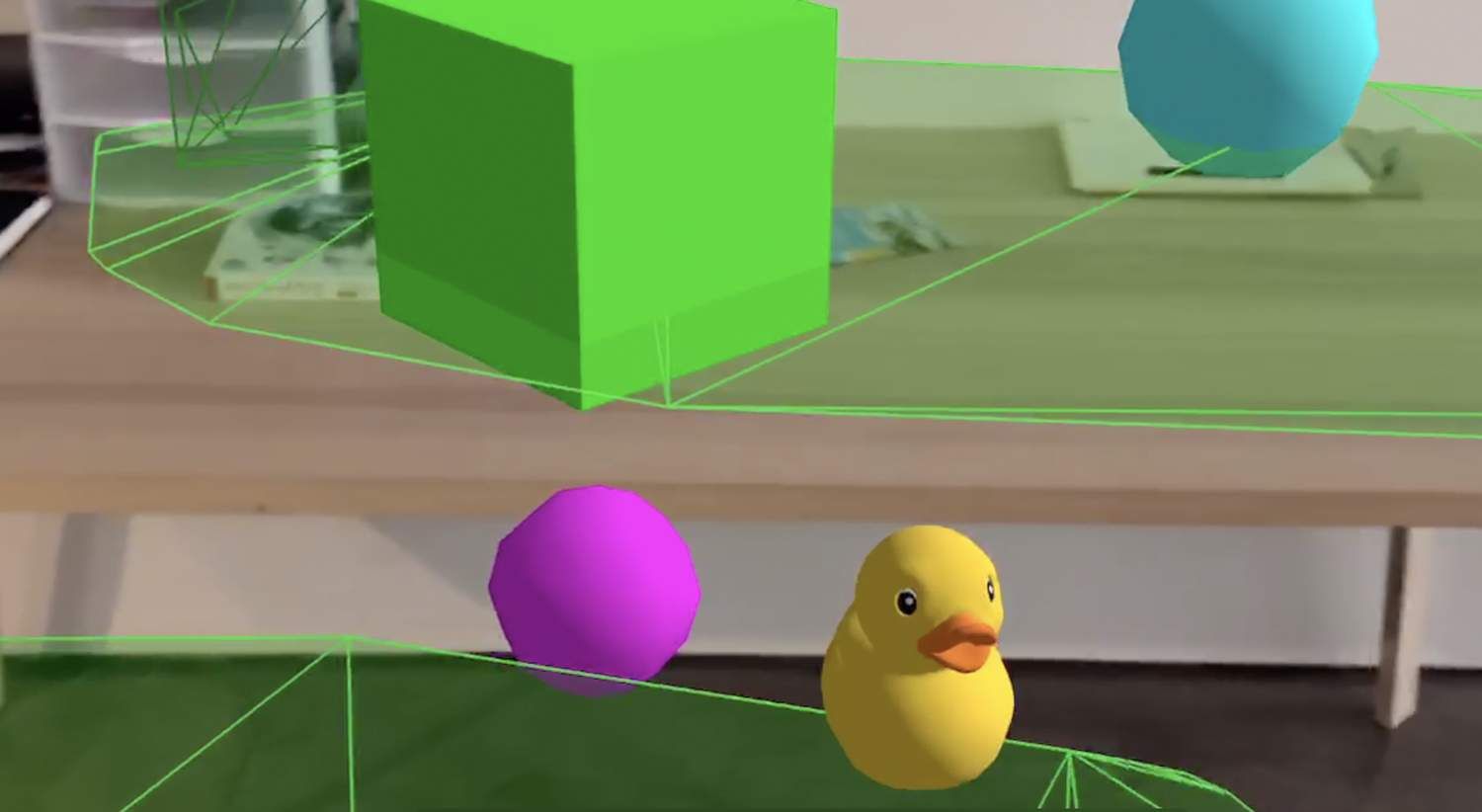

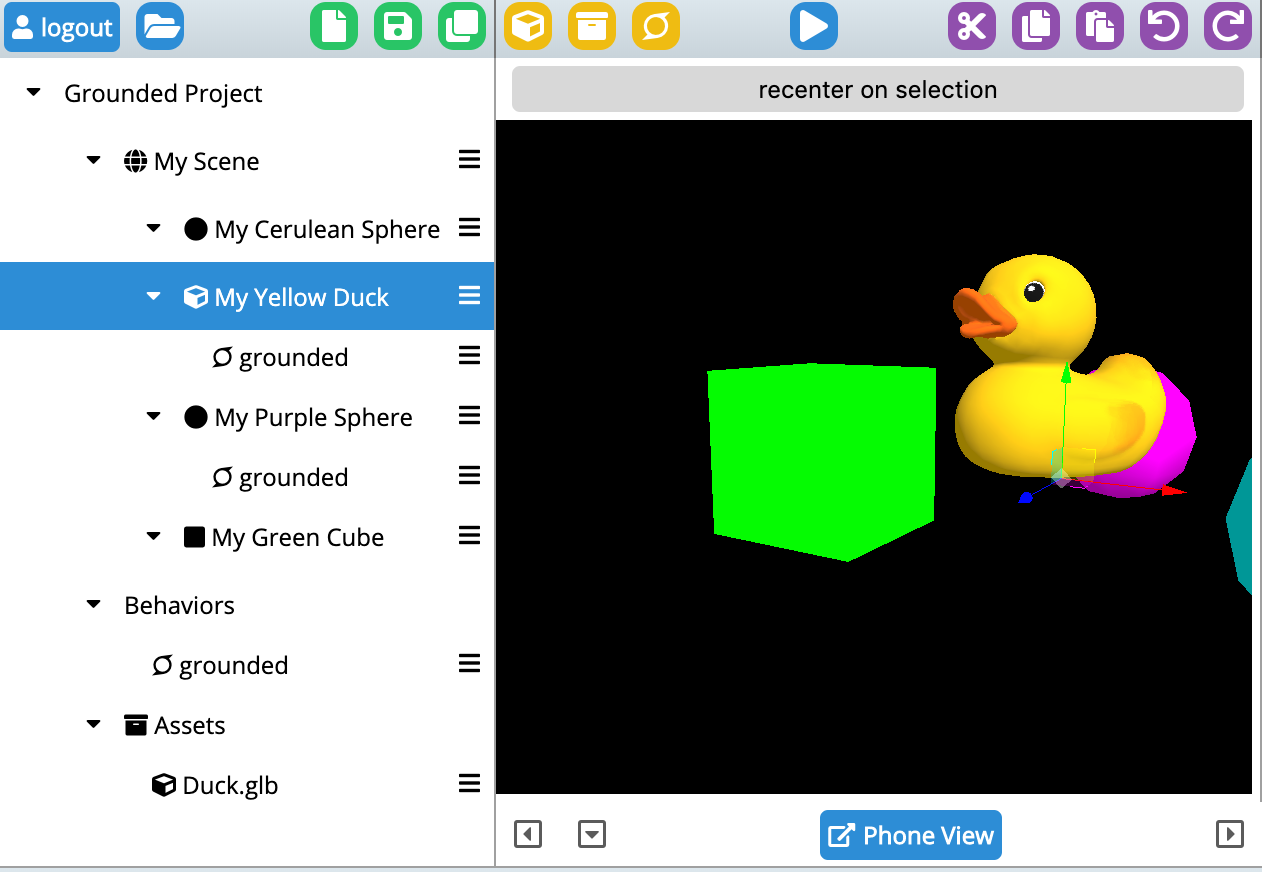

In this very small example I’ve attached a ground detection script to a duck, and then sprinkled a few other passive objects around the scene. As the ground is detected the duck will pop down from a default position to be offset relative to the ground (although still in the air). See the GIF above for an example of the effect.

To try this scene out yourself you will need WebXR for iOS, which is a preview of emerging WebXR standards that uses iOS ARKit to expose augmented reality features in a browser environment. This is the URL for the scene above in play mode (on a WebXR capable device):

https://painted-traffic.glitch.me/.mred/build/?mode=play&doc=doc_103575453

Here is what it should look like in edit mode:

You can also clone the glitch and edit the scene yourself (you’ll want to remember to set a password in the .env file and then login from inside MrEd). See:

https://glitch.com/edit/#!/painted-traffic

Here’s my script itself:

    /// #title grounded
    /// #description Stick to Floor/Ground - dynamically and constantly searching for low areas nearby
    ({
        start: function(evt) {
            // start painting the detected ground planes so they are visible
            this.sgp.startWorldInfo()
        },
        tick: function(e) {
            // every tick, ask for the best guess at the floor near this object
            let floor = this.sgp.getFloorNear({point:e.target.position})
            if(floor) {
                e.target.position.y = floor.y
            }
        }
    })

This relies on code baked into MrEd (specifically inside of findFloorNear() in XRWorldInfo.js if you really want to get detailed).

In the above example I begin by calling startWorldInfo() to start painting the ground planes (so that I can see them since it’s nice to have visual feedback). And, every tick, I call a floor finder subroutine which simply returns the best guess as to the floor in that area. The floor finder logic in this case is pre-defined but one could easily imagine other kinds of floor finding strategies that were more flexible.
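The start/tick flow described above can be tried outside MrEd with a small stand-in. This is only a sketch: `makeStubSgp` is a hypothetical stub, and attaching it as `grounded.sgp` is an assumption about how the host provides `this.sgp`. The stub's behavior (no floor until world info is started, then a fixed floor height) is invented for illustration and says nothing about how MrEd detects planes.

```javascript
// Same shape as the grounded script above, held in a variable so it can be driven by hand.
const grounded = {
  start: function (evt) {
    this.sgp.startWorldInfo();
  },
  tick: function (e) {
    const floor = this.sgp.getFloorNear({ point: e.target.position });
    if (floor) {
      e.target.position.y = floor.y;
    }
  },
};

// Hypothetical stand-in for the services the script reaches through `this.sgp`.
function makeStubSgp(floorY) {
  return {
    started: false,
    startWorldInfo() {
      this.started = true;
    },
    // Reports no floor until world info has been started, then a fixed height.
    getFloorNear({ point }) {
      return this.started ? { x: point.x, y: floorY, z: point.z } : null;
    },
  };
}

const duck = { position: { x: 0, y: 1.5, z: -2 } };
grounded.sgp = makeStubSgp(-1.2);

grounded.tick({ target: duck }); // no floor known yet: the duck stays put
const yBeforeDetection = duck.position.y;

grounded.start({});
grounded.tick({ target: duck }); // floor known: the duck snaps to it
console.log(yBeforeDetection, duck.position.y);
```

Because the script only asks "where is the floor near me?", swapping in a different floor finding strategy means changing the service behind `getFloorNear`, not the script.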

2. Follow the player

Another common designer intent is to make sure that some content is always visible to the player. As designers in virtual or augmented reality it can be more challenging to direct a user's attention to virtual objects. These are 3D immersive worlds, and the player can be looking in any direction. Some kind of mechanic is needed to help make sure that the player sees what they need to see.

One common simple solution is to build an object that stays in front of the user. This can itself be a combination of multiple simpler behaviors. An object can be ordered to seek a position in front of the user, stay at a certain height, and ideally be billboarded so that any signage or message is always legible.

In this example a sign is decorated with two separate scripts, one to keep the sign in front of the player, and another to billboard the sign to face the player.

https://painted-traffic.glitch.me/.mred/build/?mode=edit&doc=doc_875751741&doctype=vr
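The two behaviors can be sketched with plain vector math. This is not the code from the scene linked above: `frontTarget`, `seek` and `billboardYaw` are hypothetical helpers, positions are plain `{x, y, z}` objects, and the sketch assumes a Y-up coordinate system in which the sign's readable face points along its local +Z axis.

```javascript
// Hypothetical helper: a point `distance` meters ahead of the player along their
// horizontal facing direction, at `heightOffset` relative to the player's eye height.
function frontTarget(camera, distance, heightOffset) {
  const fx = camera.forward.x;
  const fz = camera.forward.z;
  const len = Math.hypot(fx, fz);
  if (len < 1e-6) {
    return null; // looking straight up or down: no usable horizontal direction
  }
  return {
    x: camera.position.x + (fx / len) * distance,
    y: camera.position.y + heightOffset,
    z: camera.position.z + (fz / len) * distance,
  };
}

// Hypothetical helper: move a fraction of the remaining distance each tick,
// so the object eases toward the target instead of jumping.
function seek(position, target, factor) {
  position.x += (target.x - position.x) * factor;
  position.y += (target.y - position.y) * factor;
  position.z += (target.z - position.z) * factor;
}

// Hypothetical helper: rotation about the Y axis that turns local +Z toward the camera.
function billboardYaw(objectPosition, cameraPosition) {
  return Math.atan2(
    cameraPosition.x - objectPosition.x,
    cameraPosition.z - objectPosition.z
  );
}

// A player at the origin looking down -Z, and a sign that starts off to the side.
const camera = { position: { x: 0, y: 1.6, z: 0 }, forward: { x: 0, y: 0, z: -1 } };
const sign = { position: { x: 3, y: 0, z: 3 }, rotationY: 0 };

for (let tick = 0; tick < 200; tick++) {
  const target = frontTarget(camera, 2, -0.2);
  if (target) {
    seek(sign.position, target, 0.1); // behavior 1: stay in front of the player
  }
  sign.rotationY = billboardYaw(sign.position, camera.position); // behavior 2: face the player
}
console.log(sign.position, sign.rotationY);
```

Keeping the two behaviors as separate functions mirrors the two separate scripts on the sign: either one can be reused on its own, for example billboarding a label that does not follow the player.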

Closing thoughts

We’ve only scratched the surface of the kinds of intent that could be expressed or combined together. If you want to dive deeper there is a longer list in a separate article (Laundry List of UX Patterns). I also invite you to help extend the industry; think both about what high level intentions you mean when you place objects and also how you'd communicate those intentions.

The key insight here is that preserving semantic intent means thinking of objects as intelligent, able to respond to simple high level goals. Virtual objects are more than just statues or art at a fixed position; they can be entities that do your bidding and follow high level rules.

Ultimately future 3D tools will almost certainly provide these kinds of services - much in the way CSS provides layout directives. We should also expect to see conventions emerge as more designers begin to work in this space. As a call to action, it's worth noticing the high level intentions that you want, and getting the developers of the tools that you use to start incorporating those intentions as primitives.