Visual Development in Hello WebXR!

This post covers many aspects of the visual design of our recently released demo Hello WebXR! (more information in the introductory post). It targets those who can create basic 3D scenes but want to learn more tricks and more ways to build things, or who are simply curious about how the demo was made visually. It is not intended to be a detailed tutorial or a dogmatic guide, just a write-up of our decisions. End of the disclaimer :)

Here comes a mash-up of many different topics, presented briefly:

- Concept

- Pipeline

- Special Shaders and Effects

- Performance

- Sound Room

- Vertigo Room

- Conclusion

Concept

From the beginning, our idea was to make a simple, slow-paced, easy-to-use experience that gathered many different interactions and mini-experiences to introduce VR newcomers to the medium, and that also showcased the recently released WebXR API. It would run on almost any VR device, but our main target device was the Oculus Quest. We thought that some mini-experiences could share the same physical space, while others would have to be moved to a different scene (room), either for performance reasons or because of their nature.

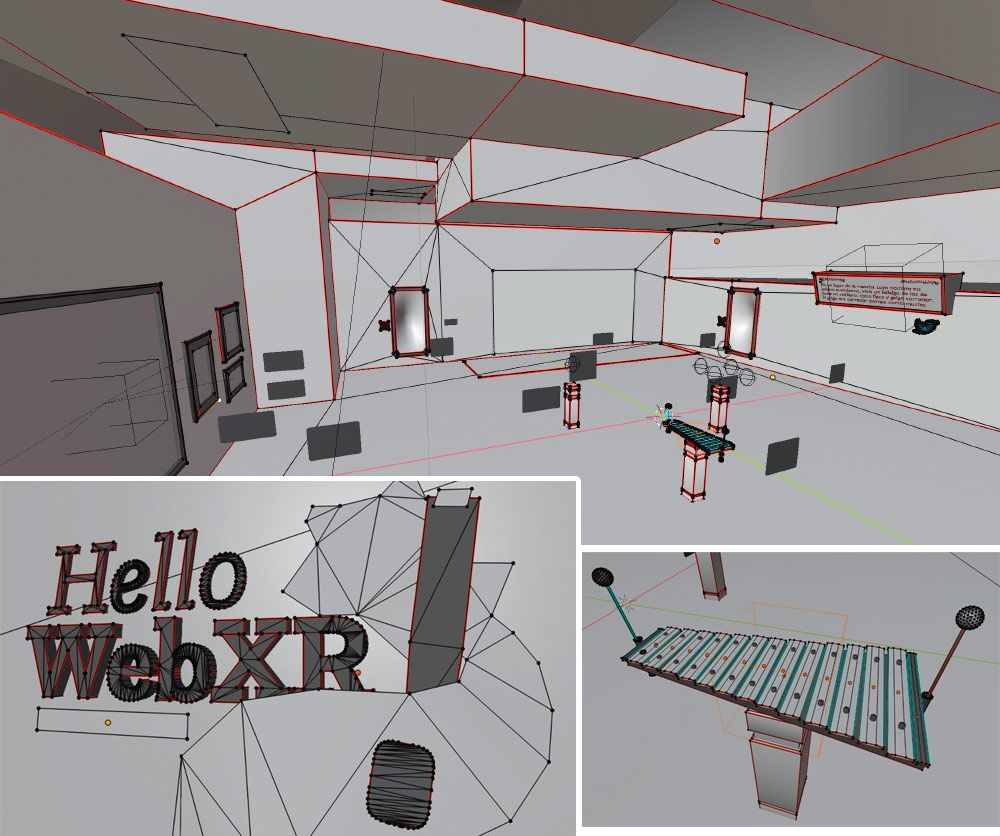

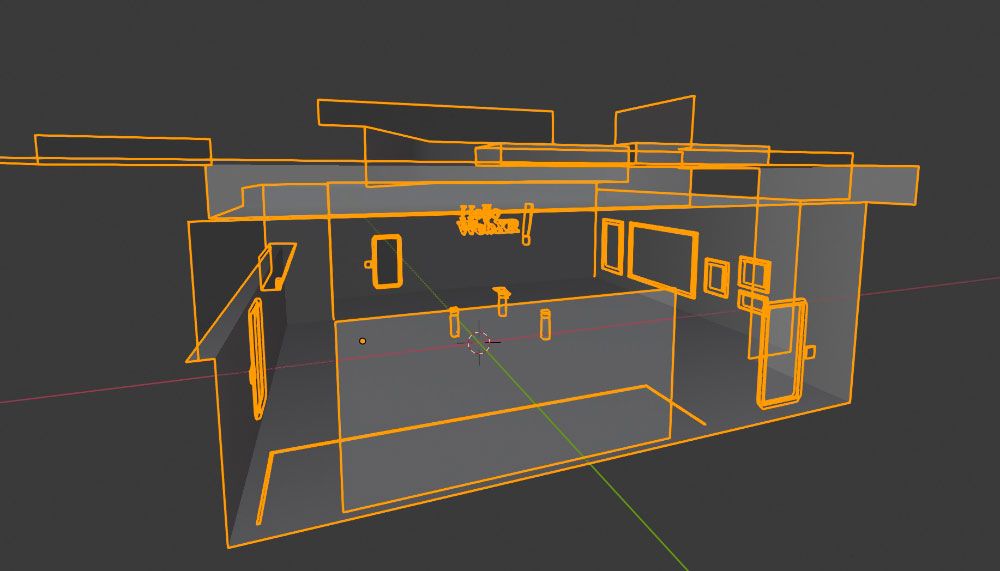

We started by gathering references and making concept art to figure out what the "main hall" would look like:

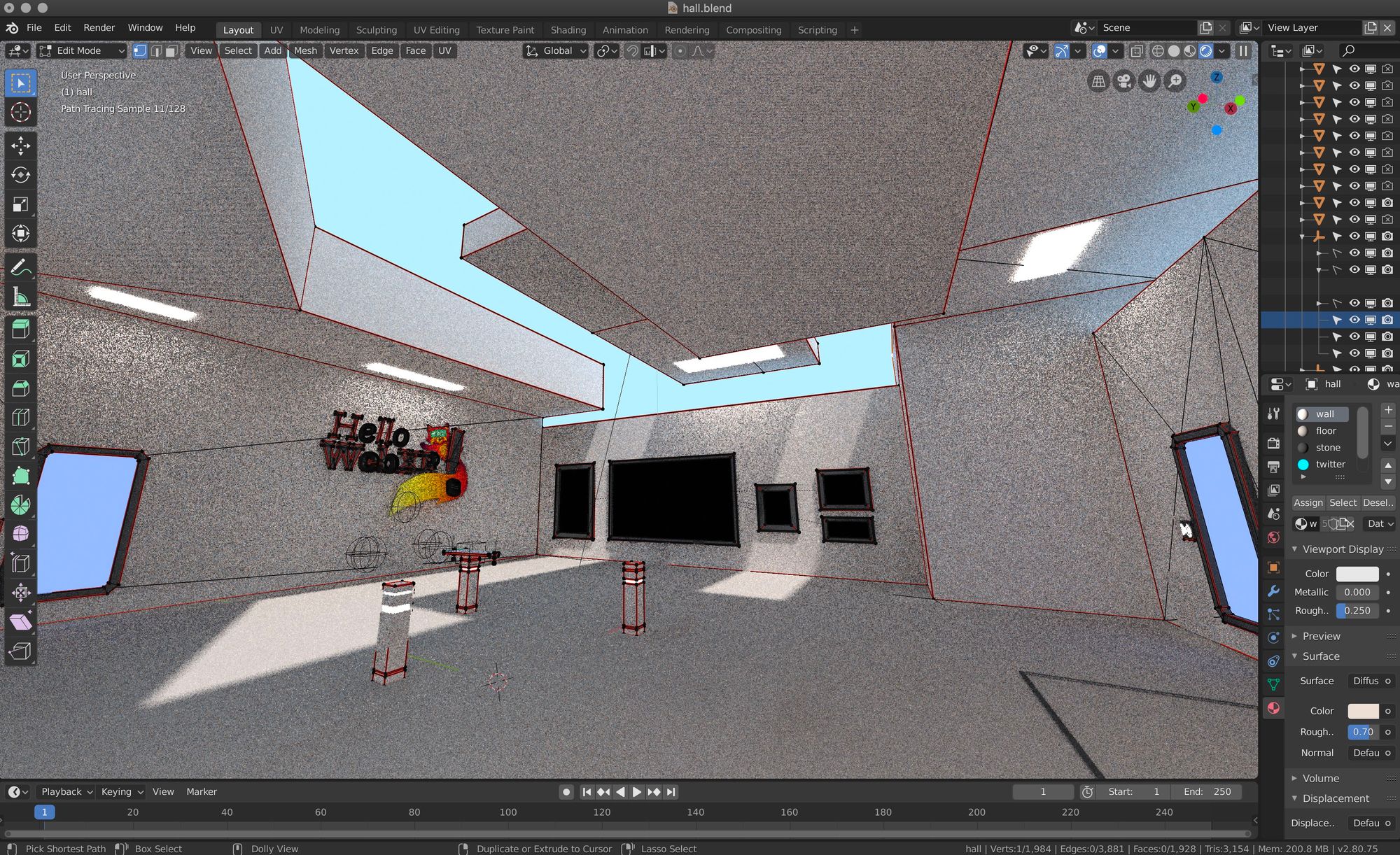

Then we used Blender to start sketching the hall and tested it in VR to see how it felt. It had to be welcoming and nice, and neutral enough to suit all audiences.

Pipeline

3D models were exported to glTF (Blender now comes with an exporter, and three.js provides a loader). PNG was used for textures almost all the time, although at a late stage in the development of the demo all textures were manually optimized to drastically reduce the size of the assets. Some textures were kept as PNG (which handles transparency), others were converted to JPG, and the bigger ones were converted to BASIS using the basisu command line program. Ada Rose Cannon’s article introducing the format and how to use it is a great read for those interested.

glTF files were exported without materials. Materials were created manually in code and assigned to the specific objects at load time, which made sure we had the exact material we wanted and let us tweak it easily.
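As a rough illustration of assigning code-defined materials at load time, here is a minimal sketch. The `SceneNode` and `Material` types and the `assignMaterials` helper are hypothetical stand-ins, not the demo's code or the three.js API; they only mimic the shape of a loaded node tree where objects are matched by name.

```typescript
// Hypothetical stand-ins for a loaded scene graph (not three.js types).
interface Material {
  name: string;
}

interface SceneNode {
  name: string;
  material?: Material;
  children: SceneNode[];
}

// Walk the node tree and assign a code-defined material to every node
// whose name has an entry in the lookup table.
// Returns how many nodes received a material.
function assignMaterials(
  root: SceneNode,
  table: Record<string, Material | undefined>
): number {
  let assigned = 0;
  const stack: SceneNode[] = [root];
  while (stack.length > 0) {
    const node = stack.pop() as SceneNode;
    const material = table[node.name];
    if (material !== undefined) {
      node.material = material;
      assigned++;
    }
    for (const child of node.children) {
      stack.push(child);
    }
  }
  return assigned;
}
```

Keeping the name-to-material table in one place is what makes later tweaks cheap: changing a material means editing one entry rather than re-exporting the model.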

In general, the pipeline was pretty traditional and simple. Textures were painted or tweaked using Photoshop. Meshes and lightmaps were created using Blender and exported to glTF and PNG.

To create the lightmap UVs, carefully picked edges were first marked as seams, and then the objects were unwrapped using the default unwrapper in the majority of cases. Finally, UVs were optimized with UVPackMaster 2 PRO.

Draco compression was also used for the photogrammetry object, which reduced the size of the asset from 1.41MB to 683KB, less than half.

Special Shaders and Effects

Some custom shaders were created for achieving special effects:

Beam shader

This was achieved by offsetting the texture along one axis and rendering it in additive mode.

The texture is a simple gradient. Since it is rendered in additive mode, black turns transparent (does not add), and dark blue adds blue without saturating to white:

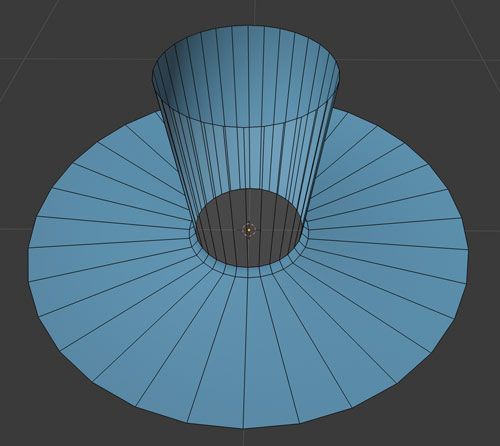

The ray target is a curved mesh. The top cylinder and the bottom disk are seamlessly joined, but their faces and UVs go in opposite directions.
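The two ideas behind the beam (scrolling a texture coordinate and additive blending) can be sketched on the CPU as follows. This is only an illustration of the math, not the demo's shader; the function names and the `speed` parameter are assumptions made for the example.

```typescript
// Color with each channel in [0, 1].
type RGB = [number, number, number];

// Offset a texture coordinate over time and wrap it back into [0, 1),
// so a repeating gradient appears to travel along the beam.
function scrollCoord(coord: number, time: number, speed: number): number {
  const shifted = coord + time * speed;
  return shifted - Math.floor(shifted);
}

// Additive blending: the source color is added to what is already there.
// Black (0, 0, 0) adds nothing, so it behaves as if it were transparent.
function blendAdditive(dst: RGB, src: RGB): RGB {
  return [
    Math.min(1, dst[0] + src[0]),
    Math.min(1, dst[1] + src[1]),
    Math.min(1, dst[2] + src[2]),
  ];
}
```

With a dark blue source such as `[0, 0, 0.5]`, only the blue channel of the background increases, which is why the beam tints what is behind it instead of blowing out to white.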

Door shader

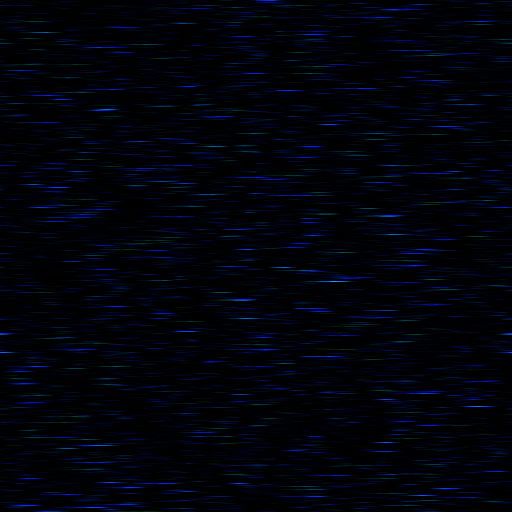

This is for the star field effect in the doors. The inward feeling is achieved by pushing the mesh from the center and scaling it in Z when the controller’s ray hovers over it.

This is the texture that is rendered in the shader using polar coordinates and added to a base blue color that changes over time:

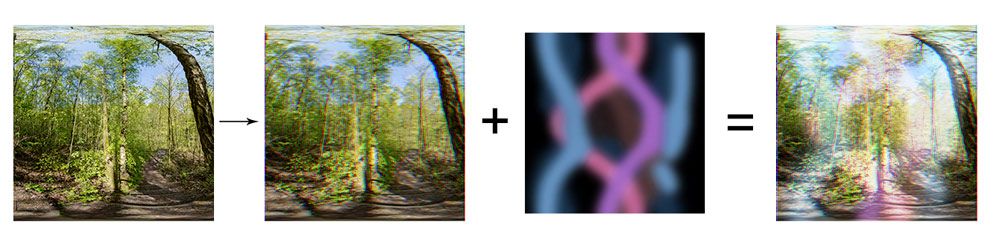
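For readers unfamiliar with polar lookups, here is a minimal sketch of converting regular UVs into polar coordinates around the center of the door. The normalization choices (angle mapped to [0, 1), center at 0.5, 0.5) are assumptions for the example and may differ from the actual shader.

```typescript
interface Polar {
  radius: number; // 0 at the center, 0.5 at the middle of each edge
  angle: number;  // fraction of a full turn, in [0, 1)
}

// Convert a UV coordinate in [0, 1] x [0, 1] into polar coordinates
// measured from the center of the quad.
function uvToPolar(u: number, v: number): Polar {
  const dx = u - 0.5;
  const dy = v - 0.5;
  const radius = Math.sqrt(dx * dx + dy * dy);
  // atan2 returns radians in (-PI, PI]; convert to turns in [0, 1).
  const turns = Math.atan2(dy, dx) / (2 * Math.PI);
  const angle = turns < 0 ? turns + 1 : turns;
  return { radius, angle };
}
```

Using `angle` and `radius` as the texture coordinates makes a flat, horizontally tiling texture wrap around the center, which is what gives a star texture its tunnel-like look.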

Panorama ball shader

This shader is used for the deformation (in shape and color) of the panorama balls.

The halo effect is just a special texture added to the landscape thumbnail, which is first modified by shifting the red channel to the left and the blue channel to the right.
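The channel shift described above can be sketched on a plain pixel array. This is a CPU illustration under assumed conventions (RGBA values stored row by row, edge pixels clamped), not the shader used in the demo.

```typescript
// Shift the red channel `shift` pixels to the left and the blue channel
// `shift` pixels to the right. Green and alpha are left untouched.
// `pixels` holds RGBA values row by row (4 numbers per pixel).
function shiftRedBlue(
  pixels: number[],
  width: number,
  height: number,
  shift: number
): number[] {
  const out = pixels.slice();
  const clampX = (x: number): number => Math.max(0, Math.min(width - 1, x));
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4;
      // Red moves left: this pixel takes its red from the pixel to its right.
      out[i] = pixels[(y * width + clampX(x + shift)) * 4];
      // Blue moves right: this pixel takes its blue from the pixel to its left.
      out[i + 2] = pixels[(y * width + clampX(x - shift)) * 4 + 2];
    }
  }
  return out;
}
```

In a fragment shader the same effect is usually obtained by sampling the texture three times with slightly different horizontal offsets, one per channel.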

Zoom shader

This shader is used in the zoom effect for the paintings, showing only a portion of the texture along with a white circular halo. The geometry is a simple plane, and the shader gets the UV coordinates of the raycast intersection to calculate which portion of the texture to show in the zoom.
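One plausible way to turn the hit UV into a magnified lookup is sketched below. The function names, the `zoom` factor and the halo radii are assumptions for illustration; the demo's shader may compute this differently.

```typescript
// Map a coordinate on the zoom plane (local UV in [0, 1]) to a coordinate
// in the painting's texture. The window is centered on the raycast hit UV
// and covers 1 / zoom of the texture in each direction.
function zoomLookup(
  localU: number,
  localV: number,
  hitU: number,
  hitV: number,
  zoom: number
): [number, number] {
  return [hitU + (localU - 0.5) / zoom, hitV + (localV - 0.5) / zoom];
}

// Amount of white halo (0 to 1) for a point on the zoom plane:
// 0 inside innerRadius, 1 at outerRadius, with a linear ramp in between.
function haloAmount(
  localU: number,
  localV: number,
  innerRadius: number,
  outerRadius: number
): number {
  const dx = localU - 0.5;
  const dy = localV - 0.5;
  const dist = Math.sqrt(dx * dx + dy * dy);
  if (dist <= innerRadius) return 0;
  if (dist >= outerRadius) return 1;
  return (dist - innerRadius) / (outerRadius - innerRadius);
}
```

The center of the zoom plane always samples exactly the hit point, so the magnified view follows the controller's ray as it moves over the painting.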

SDF Text shader

Text rendering was done using the Troika library, which turned out to be quite handy because it is able to render SDF text using only a URL pointing to a TTF file, without having to generate a texture.
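For context, the general idea behind SDF (signed distance field) text is to turn a stored distance value into a smooth opacity. The sketch below shows that generic technique only; it is not Troika's implementation, and the convention that 0.5 marks the glyph edge is an assumption commonly used in SDF rendering.

```typescript
// Standard smoothstep: 0 below edge0, 1 above edge1, smooth in between.
function smoothstep(edge0: number, edge1: number, x: number): number {
  const t = Math.max(0, Math.min(1, (x - edge0) / (edge1 - edge0)));
  return t * t * (3 - 2 * t);
}

// Convert a sampled distance value in [0, 1] into glyph opacity.
// Values above 0.5 are treated as inside the glyph (assumed convention);
// `smoothing` controls how soft the antialiased edge is.
function sdfAlpha(distance: number, smoothing: number): number {
  return smoothstep(0.5 - smoothing, 0.5 + smoothing, distance);
}
```

Because the edge is reconstructed from distances rather than from stored pixels, the text stays crisp when the user moves close to it in VR.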

Performance

Oculus Quest is a device with mobile performance, which requires a special approach to polygon count and to the complexity of materials and textures, different from what you could do on desktop or high-end devices. We wanted the demo to perform smoothly and be indistinguishable from native or desktop apps, and these are some of the techniques we used and decisions we made to achieve that:

- We didn't want a low-poly style, but something neat and smooth. However, polygon count was reduced to the minimum within that style.

- Meshes were merged whenever possible. All static objects that could share the same material were merged and exported as a single mesh.

- Materials were simplified, reduced and reused. Almost all elements in the scene have a constant (unlit) material, and only two directional lights (sun and fill) are used in the scene for lighting the controllers. PBR materials were not used. Since constant materials cannot be lit, lightmaps must be precalculated to give the feeling of lighting. Lightmaps have two main advantages:

- Lighting quality can be superior to real-time lighting, since the render is done “offline”, beforehand and without any time constraint. This allows us to do full global illumination with path tracing in Blender, simulating light close to real life.

- Since no light calculations are done in real time, constant shading is the one that has the best performance: it just applies a texture to the model and nothing else.

However, lightmaps also have two main disadvantages:

- It is easy to get big, noticeable pixels or pixel noise in the texture when applied to the model (due to insufficient texture resolution or to a lack of smoothness or detail in the render). This was solved by using 2048x2048 textures, rendered with an insane number of samples (10,000 in our case, since we didn’t have CUDA or denoising available at the time). 4096px textures were initially used and tested in Firefox Mixed Reality, but Oculus Browser did not seem to be able to handle them, so we switched to 2048, reducing texture quality a bit but improving load time along the way.

- You cannot change the lighting dynamically; it must be static. This was not really an issue for us, since we did not need any dynamic lighting.
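To put the 4096 versus 2048 choice in numbers, here is a small sketch that estimates the memory of a square texture. It assumes an uncompressed format with a fixed number of bytes per pixel (4 for RGBA8); real GPU usage depends on the actual format and driver, so treat the results as rough estimates.

```typescript
// Estimate the size in bytes of a square, uncompressed texture.
// With mipmaps, every level down to 1x1 is added (about one third extra).
function textureBytes(
  size: number,
  bytesPerPixel: number,
  withMipmaps: boolean
): number {
  let total = 0;
  let level = size;
  while (level >= 1) {
    total += level * level * bytesPerPixel;
    if (!withMipmaps) break;
    level = Math.floor(level / 2);
  }
  return total;
}

// Example values under the RGBA8 assumption:
const bytes4096 = textureBytes(4096, 4, false); // 64 MiB
const bytes2048 = textureBytes(2048, 4, false); // 16 MiB
```

Halving the side length divides the pixel count by four, which is why dropping to 2048 helps so much on a memory-constrained device.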

Sound Room

Each sound in the sound room is accompanied by a visual hint. These little animations are simple meshes animated using regular keyframes on position/rotation/scale transforms.
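Keyframe animation of this kind boils down to interpolating between stored values over time. Below is a minimal sketch with linear interpolation of a three-component value such as position or scale; the `Keyframe` type and `sampleTrack` function are hypothetical names for this example. Rotations are usually interpolated as quaternions instead, which this sketch does not cover.

```typescript
type Vec3 = [number, number, number];

interface Keyframe {
  time: number; // seconds
  value: Vec3;  // e.g. a position or a scale
}

// Sample a track at time t. Keyframes must be sorted by time.
// Before the first key or after the last one, the nearest key is held.
function sampleTrack(keys: Keyframe[], t: number): Vec3 {
  if (keys.length === 0) {
    throw new Error("a track needs at least one keyframe");
  }
  const first = keys[0];
  const last = keys[keys.length - 1];
  if (t <= first.time) return first.value;
  if (t >= last.time) return last.value;
  for (let i = 1; i < keys.length; i++) {
    const a = keys[i - 1];
    const b = keys[i];
    if (t <= b.time) {
      // Fraction of the way from keyframe a to keyframe b.
      const k = (t - a.time) / (b.time - a.time);
      return [
        a.value[0] + (b.value[0] - a.value[0]) * k,
        a.value[1] + (b.value[1] - a.value[1]) * k,
        a.value[2] + (b.value[2] - a.value[2]) * k,
      ];
    }
  }
  return last.value;
}
```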

Vertigo Room

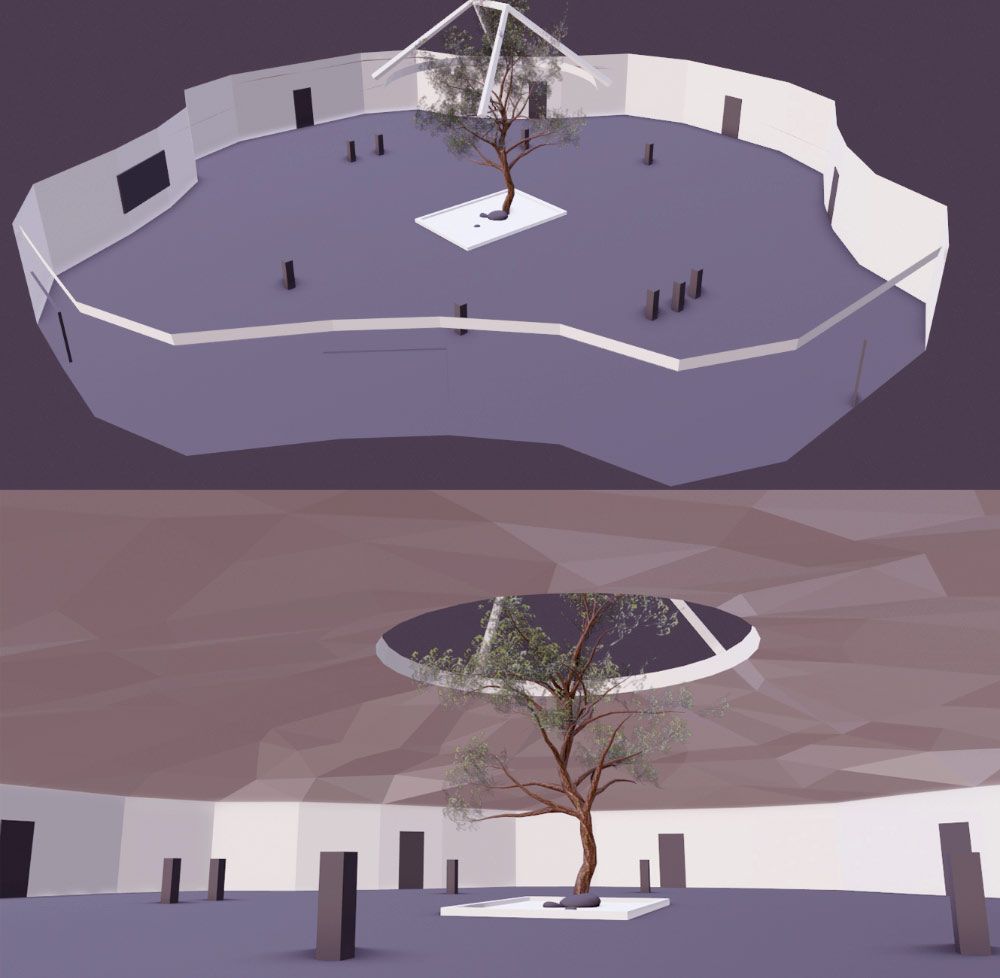

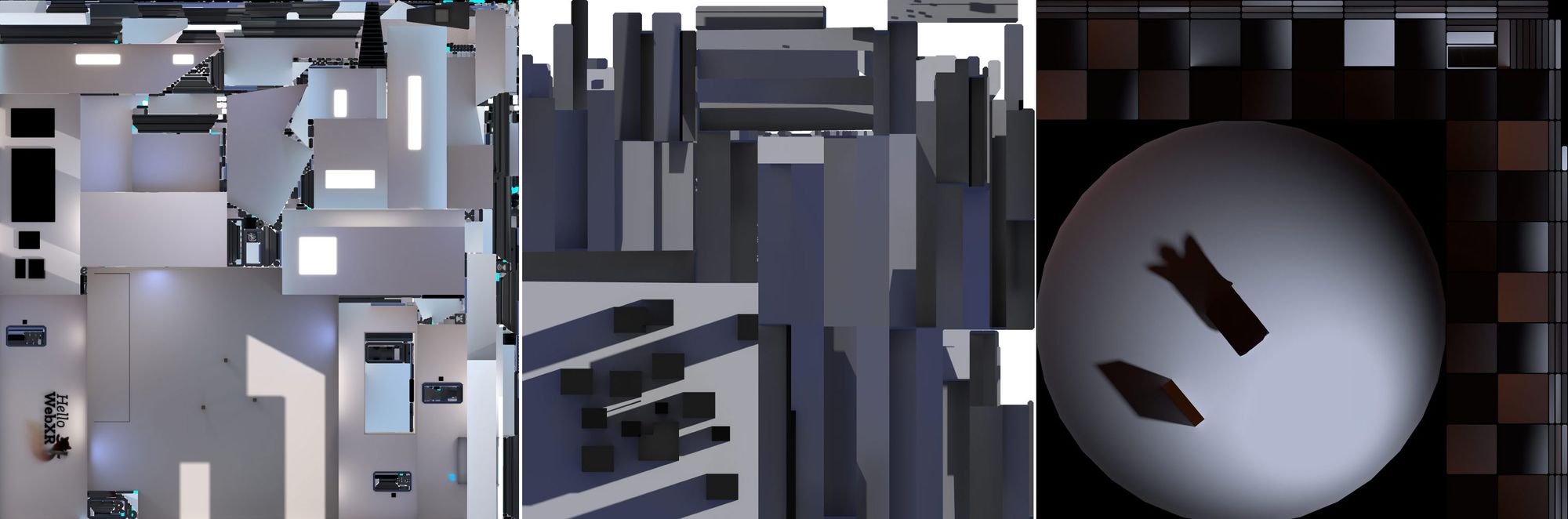

The first idea for the vertigo room was to build a low-poly but convincing city and put the user on top of a skyscraper. After some days of Blender work:

We tried this in VR, and to our surprise it did not produce vertigo! We tested different alternatives and modifications to the initial design without success. Apparently, you need more than just lifting the user to 500m to produce vertigo. Texture scale is crucial for this, and we made sure the textures were at a correct scale, but more is needed. Vertigo is about being in a situation of risk, and there were some factors in this scene that did not make you feel unsafe. Our bet is that the position and scale of the other buildings relative to the player's position made them feel less present, less physical, less tangible. Also, unrealistic lighting and textures may have contributed to the lack of vertigo.

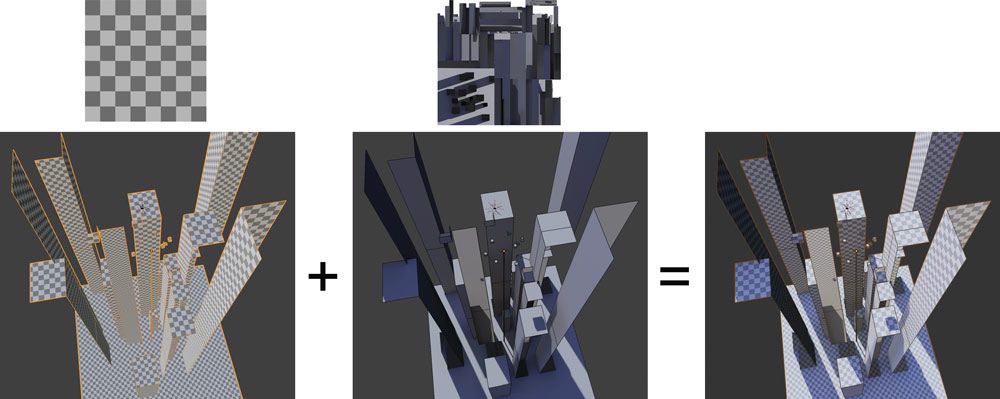

So we started another scene for the vertigo room, focusing on the position and scale of the buildings, simplifying the texture to a simple checkerboard, and placing the user in a really unsafe situation.

The scene consists of only two meshes: the buildings and the teleport door. Since the user's range of movement in this scene is very limited, we could remove all the sides of the buildings that face away from the center of the scene. The buildings use a constant material with a repeated checkerboard texture to give a sense of scale, and a lightmap texture that provides lighting and volume.
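Conceptually, a constant material with a lightmap just multiplies the base texture by the precomputed light. The sketch below illustrates that with a procedural checkerboard standing in for the repeated texture; the function names and the `dark` and `light` gray levels are assumptions for the example, not values from the demo.

```typescript
type Color3 = [number, number, number];

// Return 1 for light squares and 0 for dark squares of a checkerboard
// that repeats `repeat` times across the [0, 1] UV range.
function checker(u: number, v: number, repeat: number): number {
  const cu = Math.floor(u * repeat);
  const cv = Math.floor(v * repeat);
  return (cu + cv) % 2 === 0 ? 1 : 0;
}

// Unlit shading: base checkerboard gray multiplied by the lightmap color
// sampled for that point. No lights are evaluated at run time.
function shadeUnlit(
  u: number,
  v: number,
  repeat: number,
  lightmap: Color3,
  dark: number,
  light: number
): Color3 {
  const base = checker(u, v, repeat) === 1 ? light : dark;
  return [base * lightmap[0], base * lightmap[1], base * lightmap[2]];
}
```

The checkerboard carries the sense of scale and the lightmap carries the sense of volume, so the two textures can be authored and tuned independently.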

Conclusion

Things that did not go very well:

- We didn’t use the right hardware to render lightmaps, so it took 11 hours to render, which did not help us iterate quickly.

- We wasted a week refining the first version of the vertigo room without properly testing whether the vertigo effect worked. We were overconfident about it.

- We had a tricky bug with the Troika SDF text library on Oculus Browser for many days, which was finally solved thanks to its author.

- There is something obscure in the mipmapping of BASIS textures on the Quest. The mipmap level chosen is always lower than it should be, so textures look lower quality. This is noticeable when getting closer to the paintings from a distance, for example. We played with basisu parameters, but it did not help much.

- There are still many improvements we can make to the pipeline to speed up content creation.

Things we liked about how it turned out:

- Visually it turned out quite clean and pleasing to the eye, without looking cheap despite using simple materials and reduced textures.

- The effort we put into merging meshes and simplifying materials was worth it: performance-wise, the demo is very solid. Although we did not test on lower-end devices while developing, we loved seeing that it runs smoothly on 3DoF devices like Oculus Go and phones, and on all browsers.

- Despite some initial friction, new formats and technologies like BASIS or Draco work well and bring real improvements. If all textures were JPG or PNG, loading and starting times would be many times longer.

We uploaded the Blender files to the Hello WebXR repository.

If you want to know the specifics of something, do not hesitate to contact me at @feiss or the whole team at @mozillareality.

Thanks for reading!